Big Data Quotes: Disruptive Innovation?

“By definition, big data cannot yield complicated descriptions of causality. Especially in healthcare. Almost all of our diseases occur in the intersections of systems in the body. For example, there is a drug that is marketed by Elan BioNeurology called TYSABRI. It was developed for MS [multiple sclerosis]. It turns out that of the people who have MS a proportion respond magnificently to TYSABRI. And others don’t. So what do you conclude from this? Is it just a mediocre drug? No. It is that there is one disease but it manifests itself in different ways. How does big data figure out what is the core of what is going on?”–Clayton Christensen

The OED, Big Data, and Crowdsourcing

The term “big data” was included in the most recent quarterly online update of the Oxford English Dictionary (OED). So now we have a most authoritative definition of what recently became big news: “data of a very large size, typically to the extent that its manipulation and management present significant logistical challenges.”

Beyond succinct definitions, the enchanting beauty of the OED, at least for those who love words and their history, lies in the collection of quotations illustrating the forms and uses of each word from the earliest known instance of its occurrence to more recent ones.

As someone who has been somewhat preoccupied with uncovering the historical antecedents of our present-day usage of the term big data (see A Very Short History of Big Data), I was pleasantly surprised to find out that the OED team has traced the earliest use of the term to 1980. That is seventeen years before the publication of the first paper in the ACM digital library to use (and define) "big data." Sociologist Charles Tilly wrote in a 1980 working paper surveying "The old new social history and the new old social history" that "none of the big questions has actually yielded to the bludgeoning of the big-data people." The context is the increasing use of computer technology and statistical methods by historians. Still, it is clear that Tilly used the term not to describe the magnitude of the data specifically but as a flourish of the pen following the words "big questions." The meaning of the sentence would not change if he had used only the word "data."

While I'm quite sure that Tilly did not have in mind big data as the OED itself defines it, the context of his discussion is very relevant to today's debates regarding big data and data science. In the section of the article from which the "big data" quote is taken, Tilly paraphrases historian Lawrence Stone's 1979 discussion of the use of quantitative methods in historical research and of the attempts to make history a "science."

Stone's criticism of "cliometricians," whose "special field is economic history," reads like a description of the work of many "quants" (on Wall Street, in academia, or in government) in the decades since he issued his warning: "[Their] great enterprises are necessarily the result of team-work, rather like building the pyramids: squads of diligent assistants assemble data, encode it, programme it, and pass it through the maw of the computer, all under the autocratic direction of a team-leader. The results cannot be tested by any of the traditional methods since the evidence is buried in private computer-tapes, not exposed in published footnotes. In any case the data are often expressed in so mathematically recondite a form that they are unintelligible to the majority of the historical profession. The only reassurance to the bemused laity is that the members of this priestly order disagree fiercely and publicly about the validity of each other's findings."

Anticipating today's doubts about the effectiveness of big data and concerns about the ratio of signal to noise, Stone concludes: "in general, the sophistication of the methodology has tended to exceed the reliability of the data, while the usefulness of the results seem—up to a point—to be in inverse correlation to the mathematical complexity of the methodology and the grandiose scale of data-collection." (The same debate continues today, with both an enthusiastic embrace of the application of data science to the humanities and a rebuttal of it.)

As Tilly hinted in the title of his paper, the new often turns out to be a very familiar old. Just scratch the surface and you find that the "revolution" (a word we now tend to use liberally to describe any technological development) nicely delivers us to some place in the past while providing a soothing sense of moving forward. Indeed, the first sense of the word "revolution" in the OED is "The action or fact, on the part of celestial bodies, of moving around in an orbit or circular course" or simply "The return or recurrence of a point or period of time."

Another word added to the OED online in the recent update affirms the notion that (almost) everything old is new again. While “crowdsourcing” was coined by Jeff Howe in 2006, this “new” (revolutionary?) practice launched the OED a century and a half ago:

In July 1857 a circular was issued by the ‘Unregistered Words Committee’ of the Philological Society of London, which had set up the Committee a few weeks earlier to organize the collection of material to supplement the best existing dictionaries. This circular, which was reprinted in various journals, asked for volunteers to undertake to read particular books and copy out quotations illustrating ‘unregistered’ words and meanings—items not recorded in other dictionaries—that could be included in the proposed supplement. Several dozen volunteers came forward, and the quotations began to pour in.

The volume of the "unregistered" material was such that in January 1858 the Philological Society decided that "efforts should be directed toward the compilation of a complete dictionary, and one of unprecedented comprehensiveness." It took a while, but in April 1879 the newly appointed editor, James Murray, issued an appeal to the public, asking for volunteers to read specific books in search of quotations to be included in the future dictionary. Within a year there were close to 800 volunteers, and over the next three years the OED team received and processed 3,500,000 quotation slips.

Was this the first big-data-crowdsourcing project?

Big Data Will Make IT the New Intel Inside

Tim O’Reilly famously declared in 2005: “Data is the next Intel Inside.” It well may be that big data—the organizational skill of using data as the key driver of performance—will make the IT function the new Intel Inside, the most strategic component of any organization.

Based on the success of Google, Amazon, and eBay at the time, O'Reilly correctly asserted that database management was a core competency of Web 2.0 companies and that "control over the database has led to market control and outsized financial returns." The upcoming (and outsized) Facebook IPO is a testament to the foresight O'Reilly showed when he wrote in 2005 that "data is the Intel Inside of [Web 2.0] applications, a sole source component in systems whose software infrastructure is largely open source or otherwise commodified."

The Big Data Interview: Sanjay Mirchandani, CIO, EMC

If data sits on a desk somewhere and is not being used, it’s an opportunity wasted

Sanjay Mirchandani believes IT has to take the lead in adding value to the business in the form of big data “addictive analytics.” Mirchandani is Chief Information Officer and COO, Global Centers of Excellence, at EMC Corporation. He has been recognized as one of Computerworld’s Premier 100 IT Leaders and Boston Business Journal’s CIOs of the Year. The following is an edited transcript of our recent phone conversation.

What would you say to a CIO who dismisses big data as just another buzzword?

I would say that for too long we have been trying to manage down information. The IT world that we have become comfortable with for many years was mostly within the enterprise, maybe connecting to some partners and customers. It was also mostly structured, basically revolving around transactional data. Today, the volume, variety, velocity and complexity of information have changed the IT landscape. These are the four things I challenge CIOs to really think about. We all know how to do structured information. But the moment you throw in unstructured and semi-structured information, life changes. This is where the value is for organizations today.

Does this also change the relationships between IT and the business?

Only IT has a complete picture of all the data in the enterprise. At the same time, IT today cannot have a monopoly on information. That changes the roles and responsibilities of IT and the business. We in IT want to deliver more as a service, and the business wants to consume more as a service. And IT and the business increasingly share tools and capabilities. For example, I can offer a tool like Greenplum Chorus, a community-based tool for BI, data warehousing, and analytics, where data scientists in IT work collaboratively with data scientists sitting in the business. If there's something we can do better, we'll take it on ourselves; if there's something they can do better, like creating their own wrappers around the analytics, they will do it. What's clear is that IT and the business have never been better aligned.

The Data Science Interview: Mok Oh, PayPal

To Do Data Science, You Need a Team of Specialists

Currently the Chief Scientist at PayPal, Mok Oh came on board when eBay acquired WHERE, where he was Chief Innovation Officer. Prior to WHERE, Mok founded EveryScape, a data visualization company. The following is an edited transcript of our recent phone conversation.

How do you define a data scientist?

Mingsheng Hong: The Data Scientist is the New Product Manager

Boston’s new data science-related meetup, The Data Scientist, got off to a great start yesterday with a presentation titled “The Scientist, The Team and The Purpose,” entertainingly delivered by Mingsheng Hong, Chief Data Scientist at Hadapt.

What Has Steve Jobs Wrought?

Steve Jobs had an insanely great ride on the waves of digitization that have transformed the way we work and play over the last few decades. But after taking a cursory look at the hundreds of tributes published to commemorate the anniversary of his passing, I was surprised to find lots of trees but not a single forest. The big-picture view of Jobs' life is sorely missing.

We hear about a lot of specific things that he did or stimulated: He was “a genius toymaker,” a “genuine human being,” a “patent warrior.” He invented this, pushed for that, and denounced the other thing. All true. But wasn’t there something bigger that connected all the dots besides his creativity and drive?

What Will Make You a Big Data Leader?

The IBM Institute for Business Value's 2013 analytics survey polled 900 business and IT executives from 70 countries. "Leaders" (19% of the sample) were respondents who identified themselves as "substantially outperforming their market or industry peers," in response to a question the IBM Institute for Business Value has used for years across a wide variety of surveys.

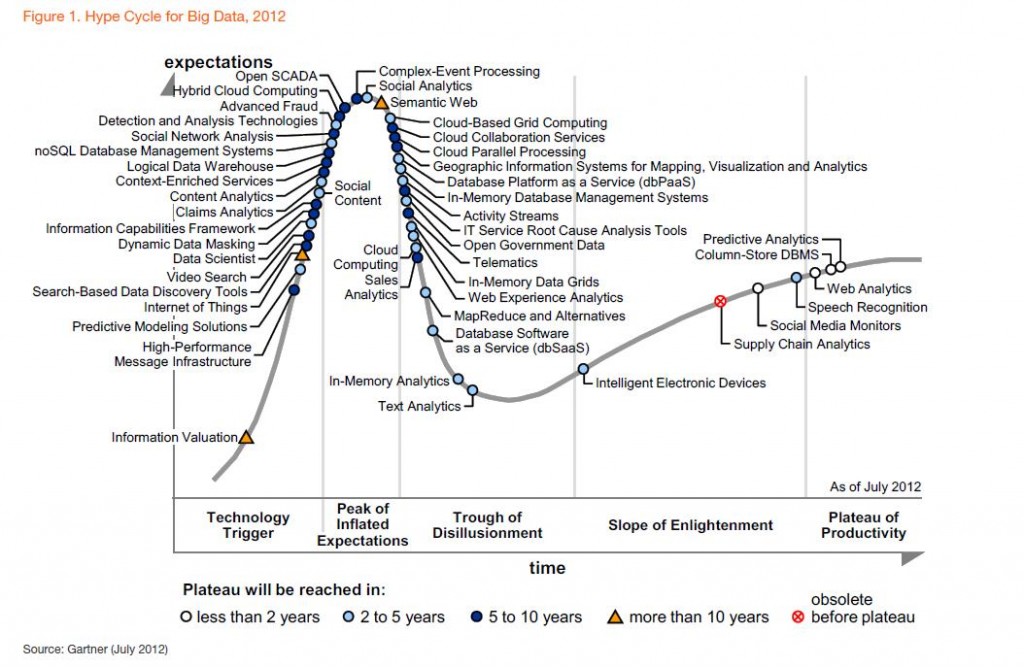

Gartner’s Hype Cycle for Big Data

Louis Columbus at Forbes.com surveys key big data forecasts and market size estimates, including Gartner’s recent Hype Cycle for Big Data. The winning technologies in the immediate future? “Column-Store DBMS, Cloud Computing, In-Memory Database Management Systems will be the three most transformational technologies in the next five years. Gartner goes on to predict that Complex Event Processing, Content Analytics, Context-Enriched Services, Hybrid Cloud Computing, Information Capabilities Framework and Telematics round out the technologies the research firm considers transformational.”

More on the report from Beth Schultz at AllAnalytics:

Gartner’s Hype Cycle is extremely crowded, with nearly 50 technologies represented on it. Many of them are clustered at what the firm calls the peak of inflated expectations, which it says indicates the high level of interest and experimentation in this area. As experimentation increases, many technologies will slide into the “trough of disillusionment,” as MapReduce, text analytics, and in-memory data grids have already done, the report says. This reflects the fact that, even though these technologies have been around for a while, their use as big-data technologies is a newer development.

Interestingly, Gartner says it doesn’t believe big-data will be a hyped term for too long. “Unlike other Hype Cycles, which are published year after year, we believe it is possible that within two to three years, the ability to address new sources and types, and increasing volumes of information will be ‘table stakes’ — part of the cost of entry of playing in the global economy,” the report says. “When the hype goes, so will the Hype Cycle.”